Obstetric practice has a long tradition of recording and reporting outcomes. More senior readers may remember the large leather-bound labour ward ledgers into which details of every mother and birth were handwritten each day (Figure 1). In Victoria, those meticulous chronicles provided foundational information to Dr Marshall Allan, newly recruited to Melbourne from Brisbane in 1925, for his inquiry into Victorian obstetric outcomes, which had been commissioned because of quality and safety concerns.1 Allan’s inquiry, and similar activities in New South Wales and Queensland, marked the formal beginning in Australia of using obstetric outcome reporting to identify opportunities to improve care. Only a few years earlier, Allan had called for the creation of antenatal clinics as an essential measure to improve perinatal and maternal outcomes.2 In many ways, Allan’s work established the outcome reporting practices that foreshadowed Victoria’s Consultative Council on Obstetric and Paediatric Mortality and Morbidity (CCOPMM), established in 1962. In essence, Allan asked – and CCOPMM continues to ask – ‘how good are we?’

Figure 1. Excerpt of a labour ward birth register, c1870.

In more recent times, as with other branches of healthcare, pregnancy outcome reporting has evolved to embrace performance indicators. Several key features distinguish performance reporting from traditional outcome reporting. First, performance indicators are typically reported at a more granular level: by health service or hospital rather than by state or nation. Second, they provide comparative, so-called benchmarking, data. Traditionally, this has been done with de-identified data, so that each hospital knows its own identity but not those of the others, as in the reports from Health Roundtable or Women’s Healthcare Australasia (WHA). However, this is changing: an increasing number of agencies, including government, are seeking identified reporting. Benchmarking allows individual hospitals to ask not only ‘how good are we?’ but also ‘how good are we compared to others?’ For example, in Victoria the Perinatal Services Performance Indicators (PSPI) report has published comparative clinical performance data by individual hospital on more than ten indicators for almost 15 years.3 New Zealand has had a similar publicly released report, the New Zealand Maternity Clinical Indicators, since 2012.4 Allan would be proud. He argued for central oversight of health performance, finding that many of the problems in 1920s Victoria arose because ‘there is no central authority controlling the health affairs of the State.’5 Nearly a century later, Targeting Zero, a review of clinical governance in Victorian public hospitals that was itself triggered by a maternity service failure, reached very similar findings.6

However, being government-led does not in itself ensure that performance reporting leads to improved outcomes. Whether at national, state or territory, or health service level, there can be a lack of clarity about how such data drive improvement. So often in healthcare we are surrounded by data that don’t seem useful to the clinician or the consumer – data reported almost for the sake of reporting. Or worse, for compliance. How are patient outcomes improved by that? As the German philosopher Goethe opined, ‘Knowing is not enough. We must apply.’

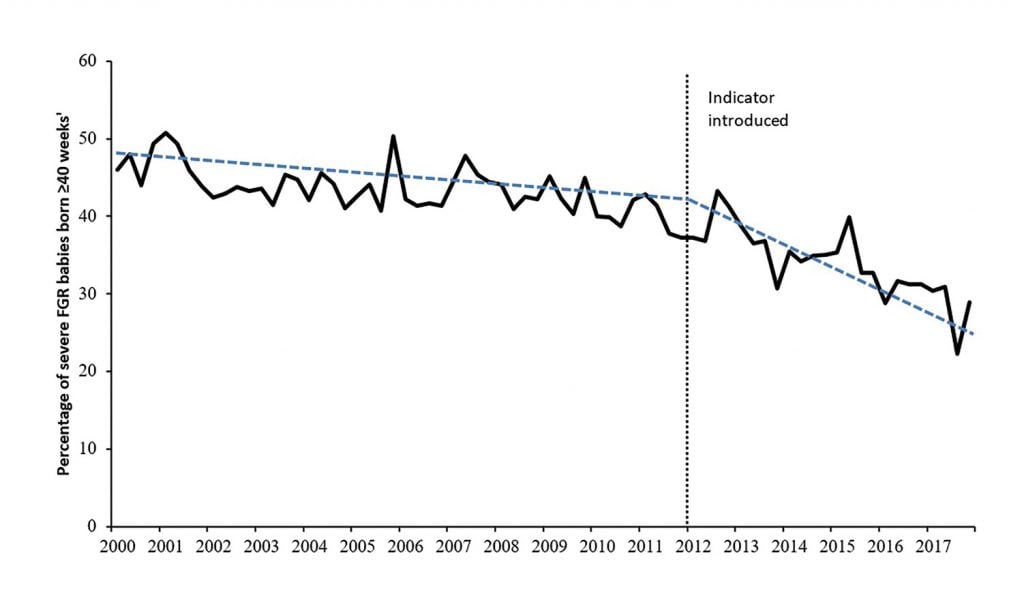

In recent years, Safer Care Victoria, the state’s healthcare improvement agency, has used performance reporting at the health service level, as published in the PSPI, as one of its key approaches to identifying opportunities for improved care. Central to this approach is engagement with clinicians. From the outset of the PSPI, clinicians decided what data were relevant and how they should be reported. Government then ‘lifts the phone’ to services where outcomes are poorer than expected, offering insights from other services and support for improvement. But, and this is important, the improvement activities are locally designed and implemented. This has yielded genuine and trusted partnerships between clinicians, health services, government and, more recently, consumers. Such an approach appears to be working, at least for some indicators. For example, since a measure designed to improve the detection of severe fetal growth restriction (FGR) began to be publicly reported, the rate of babies with severe FGR born after 40 weeks’ gestation has fallen four-fold (Figure 2).7 As a result, the rate of stillbirth among these babies has fallen by 24%.8 Similarly, a longstanding PSPI indicator was the rate of antenatal steroid administration before 34 weeks’ gestation. Performance across Victorian public maternity services increased from 83% in 2001 to 90% in 2008, and the indicator was retired in 2009 after clinicians advised that it had reached a ceiling.9

Figure 2. Improvement in severe FGR detection in Victoria (adapted from Selvaratnam et al. BJOG. 2019;127:581-9).

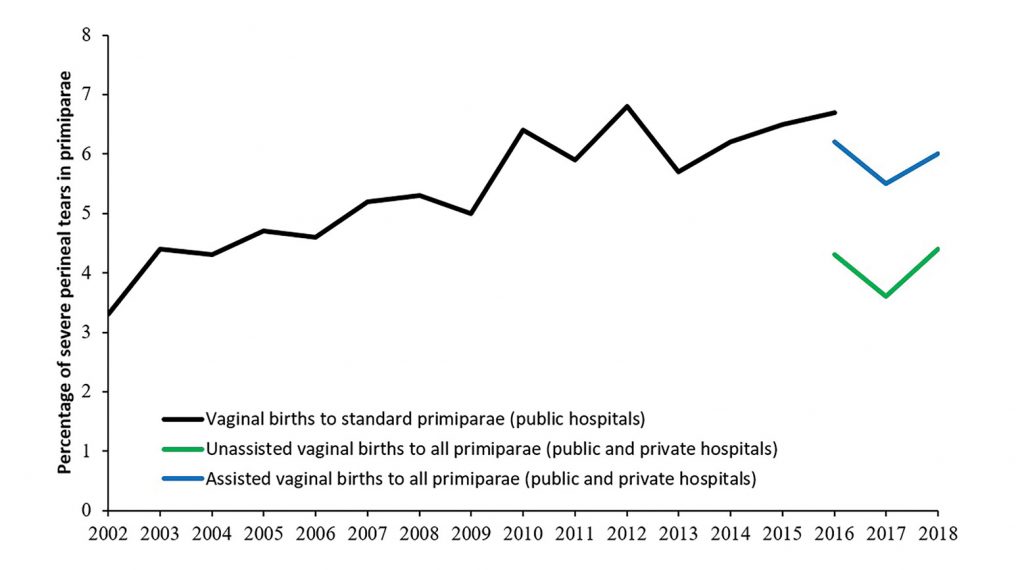

More recently, clinicians have highlighted the need to reduce the rate of obstetric anal sphincter injury (OASI). The rate of third- and fourth-degree tears in standard primiparae has always been reported in the PSPI, but it has been rising, not falling (Figure 3). Clinicians advised that the performance indicator wasn’t useful, at least in its current form. They wanted better insight into which women were sustaining OASI so that interventions could be better targeted. For the last three years, the PSPI performance indicator for third- and fourth-degree tears has reported rates separately for primiparae having an unassisted vaginal birth and those having an assisted vaginal birth (Figure 3). This has made more visible the need to reduce OASI among women having an assisted vaginal birth. Applying this knowledge, and with co-funding from Safer Care Victoria, 10 Victorian hospitals joined 18 hospitals in New South Wales, Queensland, South Australia and Western Australia in a Women’s Healthcare Australasia and Clinical Excellence Commission (WHA-CEC) national improvement collaborative. The aim of the collaborative was to reduce the rate of OASI by 20%. This introduces the third question that, together with ‘how good are we?’ and ‘how good are we compared to others?’, underpins successful quality improvement: ‘how good do we want to be?’ One of the hallmarks of high-performing health services is a vision for improvement with clear, measurable goals.10 With an improvement goal set, the WHA-CEC collaborative saw an 18% reduction in OASI among women having an assisted vaginal birth.11 Almost 500 fewer women sustained a third- or fourth-degree perineal tear in the participating hospitals.12 This work is now being spread more widely across other Victorian maternity services, led by consumers and clinicians and supported by government.

Figure 3. Rates of third- and fourth-degree tears in Victoria (adapted from PSPI reports).

So, what are some lessons for the successful use of performance reporting to improve outcomes? First, be clear about the purpose: it should be performance improvement, not compliance or regulation. Second, the indicators should be meaningful to those to whom they matter most. A short list of measures should serve as good proxies for important outcomes and give both consumers and clinicians clear insight into what actions or interventions might improve care. This requires the involvement of consumers and clinicians in the very design of the measures. Third, performance reports should identify hospitals or health services by name and be publicly accessible. This allows transparent benchmarking, makes variation in outcomes more visible and more amenable to intervention and, best of all, harnesses the innate desire of clinicians to be as good as they can be. Indeed, we have argued that transparent reporting of outcomes linked to government action may be all that is required for improvement.13 Benchmarking also allows health services and government to set evidence-informed goals for improvement, such as the 20% reduction in OASI, or the 20% reduction in stillbirth recently set by the Centre of Research Excellence in Stillbirth (Stillbirth CRE) for the Safer Baby Bundle improvement collaborative. This clear and measurable goal to reduce the rate of stillbirth has already been adopted by Safer Care Victoria, the NSW Clinical Excellence Commission and Clinical Excellence Queensland. This takes us to the fourth lesson: government action. Central to the improvement agenda is that government should not approach underperformance punitively, but should instead act as an enabler of change and improvement. To be successful, improvement initiatives need to be designed by those who are providing the care.
Formal improvement collaboratives, such as the WHA-CEC perineal tear collaborative or the Stillbirth CRE Safer Baby Bundle, begin with clinicians and consumers advising on what interventions are likely to work in their environment. Government then plays a critical role in leading and coordinating shared learning and collaboration across health services, supporting improvement activities and providing training in formal improvement methods.

Another, less visible, benefit of central government oversight is that unintended consequences of improvement initiatives can be detected quickly. For example, the improved detection of severe FGR in Victoria has come at a cost: a four-fold increase in the rate of early delivery for normally grown babies and a doubling in the rate of admission of these babies to the neonatal intensive care unit.14 Such unintended consequences are often not readily apparent at the level of an individual health service but, because numbers are larger at the system level, they are visible to government. This allows compensating measures, so-called balance measures, to be developed and introduced rapidly to monitor and mitigate unintended consequences. This year, as part of routine reporting in the PSPI, Victorian hospitals will be provided with a new balance measure to monitor the unintended ‘collateral’ harm of their FGR detection. Reported alongside the existing measure of severe FGR detection, it will let hospitals see both the sensitivity and the specificity of their FGR detection together. They will also be able to compare themselves with others and set new goals for improvement. The next step will be for Safer Care Victoria to identify high-performing hospitals and share their strategies with others.
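Sensitivity and specificity can be read as two proportions from a simple 2×2 table of detection results. The minimal Python sketch below illustrates the idea, assuming each baby is classified as either having severe FGR or being normally grown, and as either flagged or not flagged antenatally. The `DetectionCounts` class, the function names and the counts are hypothetical and for illustration only; the PSPI’s actual indicator definitions (numerators, denominators, gestational cut-offs) may differ.

```python
from dataclasses import dataclass


@dataclass
class DetectionCounts:
    """Hypothetical 2x2 counts for one hospital's FGR detection (illustrative only)."""
    true_positives: int   # babies with severe FGR who were detected antenatally
    false_negatives: int  # babies with severe FGR who were not detected
    true_negatives: int   # normally grown babies who were not flagged
    false_positives: int  # normally grown babies flagged as suspected FGR (assumed proxy for 'collateral' harm)


def sensitivity(c: DetectionCounts) -> float:
    """Proportion of truly growth-restricted babies that were detected."""
    return c.true_positives / (c.true_positives + c.false_negatives)


def specificity(c: DetectionCounts) -> float:
    """Proportion of normally grown babies that were correctly not flagged."""
    return c.true_negatives / (c.true_negatives + c.false_positives)


if __name__ == "__main__":
    # Made-up numbers for a single hypothetical hospital.
    hospital = DetectionCounts(true_positives=30, false_negatives=20,
                               true_negatives=900, false_positives=50)
    print(f"sensitivity = {sensitivity(hospital):.2f}")  # 30/50
    print(f"specificity = {specificity(hospital):.3f}")  # 900/950
```

With these illustrative numbers, sensitivity is 30/50 = 0.60 and specificity is 900/950 ≈ 0.947. Pushing detection harder tends to raise sensitivity while adding false positives, which is why reporting the two together makes the trade-off visible.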

In summary, we have provided a general framework for how performance reporting can drive purposeful improvement. It is not just about reporting outcomes: you can’t fatten a cow by weighing it. It is through the collaboration of all involved in maternity care – consumers (first and foremost), clinicians, health service executives, improvement experts and government – that performance reporting can be harnessed to target and drive improvement, delivering better and safer births for all women and their babies. All of this is an evolution of the work that Marshall Allan and his colleagues formally began almost a century ago.

References

- Allan R. Report on Maternal Mortality and Morbidity in the State of Victoria. Med J Aust. 1928;6:668-85.

- Allan R. The need for ante-natal clinics. Med J Aust. 1922;2:53-4.

- Hunt RW, Ryan-Atwood TE, Davey M-A, et al. Victorian perinatal services performance indicators 2018–19. Melbourne: Safer Care Victoria, Victorian Government; 2019. Available from: bettersafercare.vic.gov.au/sites/default/files/2019-02/Vic%20perinatal%20services%20performance%20indicators%202017-18.pdf.

- Ministry of Health. New Zealand Maternity Clinical Indicators 2017. Wellington: Ministry of Health; 2019. Available from: www.health.govt.nz/system/files/documents/publications/new-zealand-maternity-clinical-indicators-2017-jul19.pdf.

- Allan R. Report on Maternal Mortality and Morbidity in the State of Victoria. Med J Aust. 1928;6:668-85.

- Duckett S, Cuddihy M, Newnham H. Targeting zero: supporting the Victorian hospital system to eliminate avoidable harm and strengthen quality of care. Report of the Review of Hospital Safety and Quality Assurance in Victoria. Melbourne: State Government of Victoria Department of Health and Human Services; 2016. Available from: www.dhhs.vic.gov.au/publications/targeting-zero-review-hospital-safety-and-quality-assurance-victoria.

- Selvaratnam RJ, Davey M-A, Anil S, et al. Does public reporting of the detection of fetal growth restriction improve clinical outcomes: a retrospective cohort study. BJOG. 2019;127:581-9.

- Selvaratnam RJ, Davey M-A, Anil S, et al. Does public reporting of the detection of fetal growth restriction improve clinical outcomes: a retrospective cohort study. BJOG. 2019;127:581-9.

- Department of Health. Victorian maternity services performance indicators 2009-10; 2012. Available from: health.vic.gov.au/about/publications/researchandreports/Victorian%20maternity%20service%20performance%20indicators%202009-2010.

- Ham C, Berwick D, Dixon J. Improving quality in the English NHS: a strategy for action. London: The King’s Fund; 2016. Available from: www.kingsfund.org.uk/sites/default/files/field/field_publication_file/Improving-quality-Kings-Fund-February-2016.pdf.

- Women’s Healthcare Australasia. Reducing Harm from Perineal Tears: Celebrating Success WHA National Collaborative. 2020. Available from: women.wcha.asn.au/collaborative/evaluation.

- Women’s Healthcare Australasia. Reducing Harm from Perineal Tears: Celebrating Success WHA National Collaborative. 2020. Available from: women.wcha.asn.au/collaborative/evaluation.

- Selvaratnam RJ, Davey M-A, Anil S, et al. Does public reporting of the detection of fetal growth restriction improve clinical outcomes: a retrospective cohort study. BJOG. 2019;127:581-9.

- Selvaratnam RJ, Davey M-A, Anil S, et al. Does public reporting of the detection of fetal growth restriction improve clinical outcomes: a retrospective cohort study. BJOG. 2019;127:581-9.
